Did you ever have a situation where you felt like converting

a Sql Server Analysis Services in Multidimensional Mode to Tabular Mode ?

I thought of trying this out after reading this article. In

this article from Microsoft, there is Caution note as below.

I thought let me try what happens if I changed the

deploymentMode and see whether this changes my Multidimensional Mode to Tabular

Mode. Of course I tried this on my dev

instance of SSAS 2016.

So first things first –

- Backed up my one and only one worldwide SSAS database.

- Detached the database

- Checked the server mode in the SSAS properties as shown below.

Disconnected from my SSAS instance. - Went to location -- C:\Program Files\Microsoft SQL Server\MSAS13.DEVELOPER\OLAP\Config

- Made a copy of the MSMDSRV.INI file

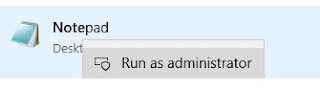

- Then opened notepad as Administrator as shown below

- Then from notepad opened the MSMDSRV.INI file

- Changed the deploymentmode from 0 to 2 as shown below

- Restarted the Analysis Services as shown below.

- Then connected to the SSAS instance in Management Studio and checked the properties.

- The Server Model is showing as Tabular as shown below

Below are the steps I followed to convert the

multidimensional SSAS model to Tabular Model

I know I cannot reattach the worldwide multidimensional model database I saved earlier.

More in a next blog whether I have been successful or not.